If you have already played Pokémon GO and understand augmented reality (AR), you may skip to the next paragraph. If not: Pokémon GO inserts animated images (creatures called Pokémon) in real time into the live video stream that comes through the camera lens on your smartphone. Point your camera lens at the sidewalk in front of you and, if the software expects a Pokémon to be at that location, you’ll see it on your screen even though you don’t see it when you look directly at the sidewalk.

Outside of Pokémon GO, you can find a number of examples of excellent AR implementations on contemporary TV, especially in sports broadcasts. That yellow first down line you see in American football games? Yup, that’s great AR. It moves with the field when the camera pans, tilts, or zooms and it disappears behind players when they walk over it. It really does look like there’s a yellow line painted on the field. But if you think about it, you know the line is not really there. No one in the stadium can see it – only people watching the live TV broadcast.

You know those ads on the wall behind home plate during The World Series? Yup. Those, too, are artificially inserted. In fact, depending on where you are in the world, you’ll see different ads. And they exhibit the same characteristics as the yellow first down line: they stick with the field when the camera pans, tilts, or zooms and they disappear behind anyone who walks in front of them.

The math and the video technology behind those two examples of AR would blow your mind. Watch for more AR this summer during the Olympics. You’ll notice that all these AR implementations exhibit the same three characteristics (in order of technical difficulty):

1. A graphic image, sometimes animated, is inserted into a live video stream.

2. The image seems to move with its “real world” surroundings, whether those surroundings consist of an American football field, a wall in a baseball stadium, or (in the Olympics) the bottom of a pool.

3. The inserted image disappears behind any object that passes between the camera lens and the place where the inserted image is supposed to be – including batters, catchers, and umpires in a baseball game or referees and players in a football game.
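
To make the second characteristic a little more concrete, here is a minimal sketch in Python. It assumes an idealized pinhole camera that stays in one spot and only pans; the function name `project_point` and its parameters (focal length in pixels, image center) are hypothetical and chosen purely for illustration. Real broadcast systems also have to handle tilt, zoom, and lens distortion, and nothing below is taken from any actual product.

```python
import math


def project_point(world_xyz, yaw_rad, focal_px, center_px):
    """Project a world-anchored 3D point into pixel coordinates.

    Assumptions (illustrative only): the camera sits at the origin, looks
    along +z when yaw is 0, and has panned to the right by yaw_rad about
    the vertical axis. y is measured in the image's downward direction.
    """
    x, y, z = world_xyz
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    # Express the point in the camera's frame (undo the camera's pan).
    x_cam = c * x - s * z
    z_cam = s * x + c * z
    if z_cam <= 0:
        return None  # the point is behind the camera, so nothing is drawn
    # Standard pinhole projection: divide by depth, scale by focal length.
    u = center_px[0] + focal_px * x_cam / z_cam
    v = center_px[1] + focal_px * y / z_cam
    return (u, v)
```

If the graphic is redrawn at the projected position on every frame, panning the camera to the right slides the graphic to the left on screen, exactly as a real object at that spot would move. The illusion depends on knowing the camera's pan accurately for each frame.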

But what about Pokémon GO?

The AR software used in Pokémon GO is very cool and exciting, but it’s also pretty basic. It only really manages the first of the three characteristics listed above, which is also the easiest one, and it partially manages the second. A Pokémon is inserted into the video stream when you point your camera in its direction, but it doesn’t move with the “real world” very smoothly. If you tilt, pan, or zoom your camera, the Pokémon moves around fairly wildly and doesn’t give a very good illusion of actually being part of the “real world” surrounding it.

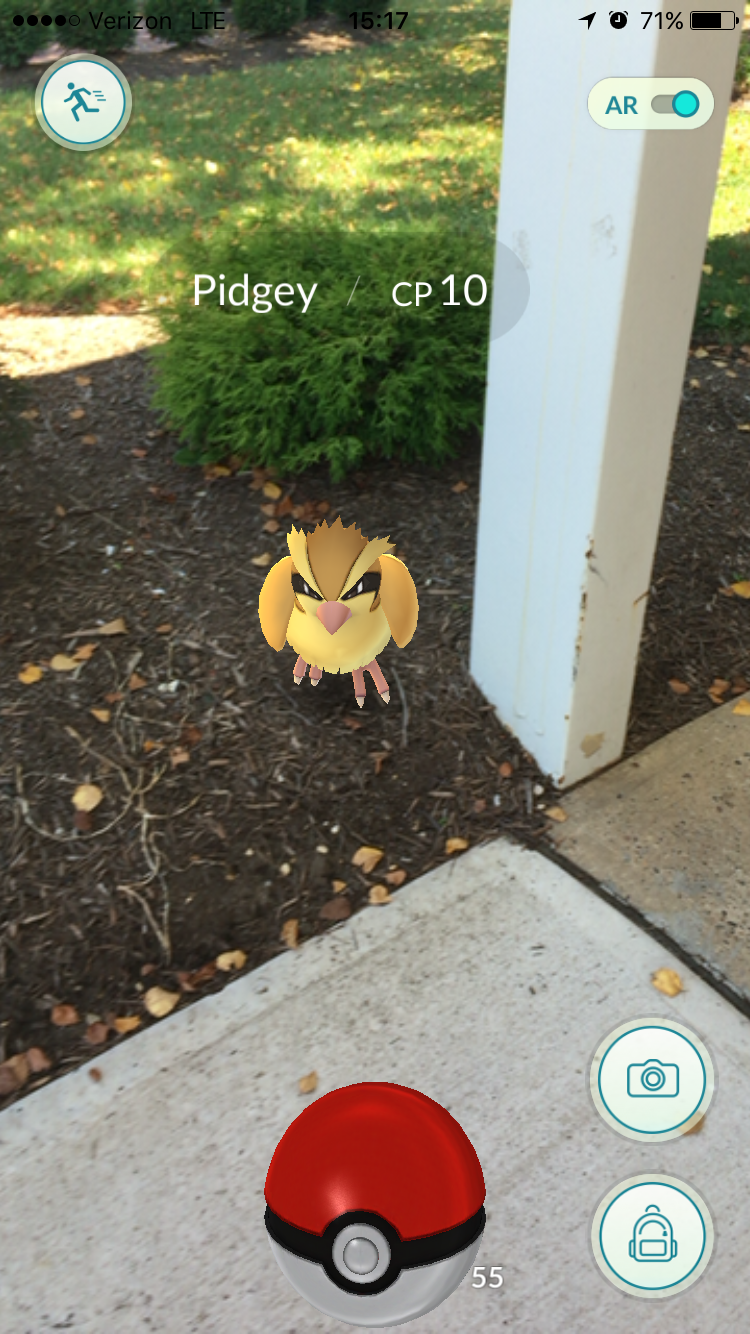

The third and most difficult characteristic of AR, of course, isn’t implemented at all in Pokémon GO. If you find a Pokémon and, while viewing it through your camera lens, pass your hand between the lens and the Pokémon, the “real world” surrounding the Pokémon will disappear behind your hand, but the Pokémon will not (see the accompanying image). The same thing applies if a friend, a car, or a passing dog comes between your phone and the Pokémon: the world disappears behind the new object but the Pokémon does not.
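
The difference between the two behaviors can be sketched in a few lines of Python. This is a toy illustration, assuming the background has a known, fairly uniform color (such as a grass field) so that anything of a different color can be treated as an object in front of it. Whether any particular broadcast system or game works this way is an assumption here; the function names, the `key_color` parameter, and the tolerance value are all hypothetical.

```python
def is_background(pixel, key_color, tolerance):
    """True if every RGB channel of pixel is within tolerance of key_color."""
    return all(abs(p - k) <= tolerance for p, k in zip(pixel, key_color))


def composite(frame, overlay, key_color=None, tolerance=40):
    """Draw overlay onto frame, optionally letting foreground objects hide it.

    frame:   rows of (r, g, b) tuples from the camera.
    overlay: rows of (r, g, b) tuples, or None where nothing is drawn.
    key_color=None draws the overlay everywhere (always on top).
    With a key_color, the overlay is drawn only on pixels that look like
    background, so differently colored objects stay visible in front of it.
    """
    out = []
    for frame_row, overlay_row in zip(frame, overlay):
        row = []
        for cam_px, ov_px in zip(frame_row, overlay_row):
            draw = ov_px is not None and (
                key_color is None or is_background(cam_px, key_color, tolerance)
            )
            row.append(ov_px if draw else cam_px)
        out.append(row)
    return out
```

Calling `composite(frame, overlay)` with no key color paints the graphic over everything, hand included, which is the behavior described above. Passing the field color as `key_color` leaves any non-matching pixels (a player, a hand) untouched, so the graphic appears to sit behind them.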

There’s no doubt about it: Pokémon GO has brought augmented reality into everyday life and made it fun for millions of people. As video processing power on mobile devices improves and more games take advantage of AR (which they will), AR implementations will improve and we’ll see better integration of the artificial images with the surrounding environment – and those images will begin to disappear behind intervening objects. For now, although the Pokémon GO implementation is far from ideal, “Progress over Perfection.”