The fundamental mission around which everything a library does revolves is the accumulation and dissemination of information for its community.

A secondary objective of a library is to provide a certain amount of entertainment.

We can debate the details of these assertions at another time, but if you grant me that “accumulation and dissemination of information” is at least a fundamental mission of all libraries, we can continue.

We can also assume that people need information and entertainment to survive an emergency, especially a pandemic. They need information so they know what to do – how to respond to the pandemic – and they need to have confidence in that information so they do not panic. In addition, community members need entertainment so they can survive emotionally as well as physically.

Finally, let us take as a given that in a pandemic, library buildings will close. In the current pandemic, many states have ordered libraries to close their doors. Many libraries in other states have voluntarily curtailed in-building operations.

Does closing the building mean that libraries have to cease operations? Of course not. That’s absurd. Libraries can still offer much of what makes libraries libraries through online services. It takes some adaptation, to be sure, but it can be done.

I have listed below some ideas of what a library can do while it is still “open for business” even while the library building is closed.

1. Communicate library activities to the community. This can and should be done aggressively by all means available.

- The cheapest and easiest way to let the community know what a library is doing is to post it on the library’s web site! Use basic marketing principles around placement and messaging. Do not, for example, have a message that says “the library is closed until further notice” right next to a message that says “come to these online meetings.” From the patron’s point of view, that’s very confusing. The building may be closed, but the library is open. It’s that simple.

- Facebook is a great way to get word out to much of the community. For a few dollars (literally about $10), it is possible to put announcements that “The Building is Closed, But the Library is Still Open” in front of thousands of community members who are stuck at home browsing Facebook. Direct them to your Facebook page and your web site where they can find out all the other details.

- The visual nature of Instagram makes it a good tool for generating interest in and excitement about library activities.

- Email is also cheap. Send a note to members about changes to policy (due dates have been extended, blocked cards have been unblocked, etc.) and, in the same email, tell them about all the things going on at the library. Get patrons to sign up for a monthly newsletter that provides updates on past and upcoming activities, and make it vibrant: low on text and high on imagery.

- Newspapers are still being published, online and in print. Make the library a story and get the newspaper to cover it (both online and in print!).

- Have the mayor and other public officials mention in their announcements that the library is still functioning online and encourage community members to visit the library’s home page for information about resources and activities that are available.

2. Move book clubs and other groups online. Don’t cancel anything! GoToMeeting, WebEx, and Zoom are just three of the many online meeting services available to libraries that make virtual meetings possible. I belong to a literary group at my neighborhood library. We don’t read specific books each month; instead, we talk about different genres and aspects of literature. The average age of members may well be around 60, but everyone quickly learned how to participate using a virtual meeting tool and everyone has been grateful to have the human contact during the stay-at-home period. It’s possible to chat while knitting in a virtual meeting. ESL sessions can be conducted online. Even chess is possible. D&D would be easy. Perhaps board games would be a challenge.

3. Add (online) events. Take advantage of online resources to add events, especially for kids. Pandemics – any emergency requiring people to stay home – are especially hard on kids and parents with small children. The more events you have that can keep kids entertained, the happier the kids will be – and the happier the parents will be that they have an active and supportive library. I know a librarian who organized an art group for teens that produced spectacular imagery. Another library added readers’ advisory services via Facebook in which a librarian would interact with multiple patrons looking for books to read.

4. Expand existing online services. When people are stuck at home, unable to go to theaters or the gym, they look to the internet for services. Some need more variety than what is available through paid services. Others simply can’t afford them. Most libraries have access to magazines, ebooks, and streaming video. These can all be expanded. Team up with other libraries through the county or state or the ALA to negotiate for greater availability of titles and less restrictive limits on the number and length of time digital assets can be accessed.

5. Expand internet access services. Libraries are often the primary source of internet access for students and adults who do not have high-speed internet access at home. They may need broadband access through the library just to use the library’s online services, but they are also likely to need it for school or work, not to mention entertainment. Even when the building is closed, the library can facilitate internet access by:

- Extending WiFi services deep into the parking lot. If patrons have access to a car, they can sit in the parking lot and use the internet.

- Lending WiFi hot spots. Obviously, anything that is being lent by the library requires the object be moved from the library to the patron, which can be an infection transmission mechanism. However, if the sterilization procedures can be worked out, libraries that can lend WiFi hot spots to patrons will do a great favor to high school students preparing for college (or college students trying to wrap up courses for graduation).

6. Provide community updates. People want and need to know what’s going on. Cable news and even regional newspapers are pretty good at covering state and national news. But what about local news and information that provide actionable knowledge to residents? Often it comes from many sources. Often community members only get part of the news they need. The library should consolidate it on its web site. No commentary. No editing. Just post it.

- Updates from the mayor’s office.

- Notices and updates from the police department.

- Updates from the county health department.

- Anything else that applies directly to the decisions being made by community members, such as:

  - Testing locations.

  - Lists of businesses providing curbside pickup.

  - News about non-library community activities.

  - Stories about good deeds in the community.

  - Stories about scams or people abusing the crisis.

7. Post information on the disease (or other emergency). Find basic, factual, non-Wikipedia sources of information about the disease, how it spreads, where it came from, what it does inside our bodies, etc. Libraries should NOT attempt to digest this information and write their own material about it. Instead, create a reading list of magazine and journal articles, books, videos (lots of videos), and webinars, all of which should be available through the library, with links for ready access. Librarians should use all of their information literacy skills to sift through the available information to find the most current, reliable information. Be sure that the information is in a form the library’s community can readily digest – the latest peer-reviewed journal article in The Lancet on the way the virus affects protein replication in lung cells may be useful to a practicing physician, but it will not help an accountant or a mom understand what they are facing. The library may want to break out the information according to age group so parents can share (and learn) with their children.

Remember, it’s all about the patrons. Don’t forget who your patrons are. If you have a large Spanish- or Mandarin-speaking community, be sure to offer these services in Spanish or Chinese as well. In many ways, these communities are the most shut off from reliable information and will depend upon the services of the library more than majority-language communities.

Stay in touch with the community. While the building is closed, direct person-to-person contact with the community may be curtailed. The library staff, especially the executive director and the department heads, need to stay in touch with members of the community to identify needs the library can fill or things that it could do better.

In a pandemic, as in any emergency, access to current, reliable information is essential for productive debate and coordinated, unified public response. Make the library what it is supposed to be: A center for reliable information for the community it serves.

Author’s note: This blog post is dated in mid-May 2020. However, it draws extensively on conversations held over the last five years in which I asked, “What is the role of a library in an emergency (such as a blizzard)?” Beginning a year ago, influenced by years of exposure to Bill Gates’ warnings about the likelihood of a global pandemic, I refined that question to “What would the role of a library be in a pandemic?” In early February of 2020, as reports of the Chinese COVID-19 outbreak spreading to other countries began appearing in the news, I proposed to the library where I worked that we begin a basic information campaign around flu avoidance (washing hands, coughing into sleeves, etc.). Then in early March of 2020, as reports of the virus in Washington State and New York City appeared in the news, I asked my pandemic question of a group of library leadership graduate students at Rutgers University. In the Rutgers discussion and in the conversations last year about the role of libraries in a pandemic, I proposed many of the ideas presented here. Of course, I got lots of thoughtful feedback then and I welcome more thoughtful comments here now.

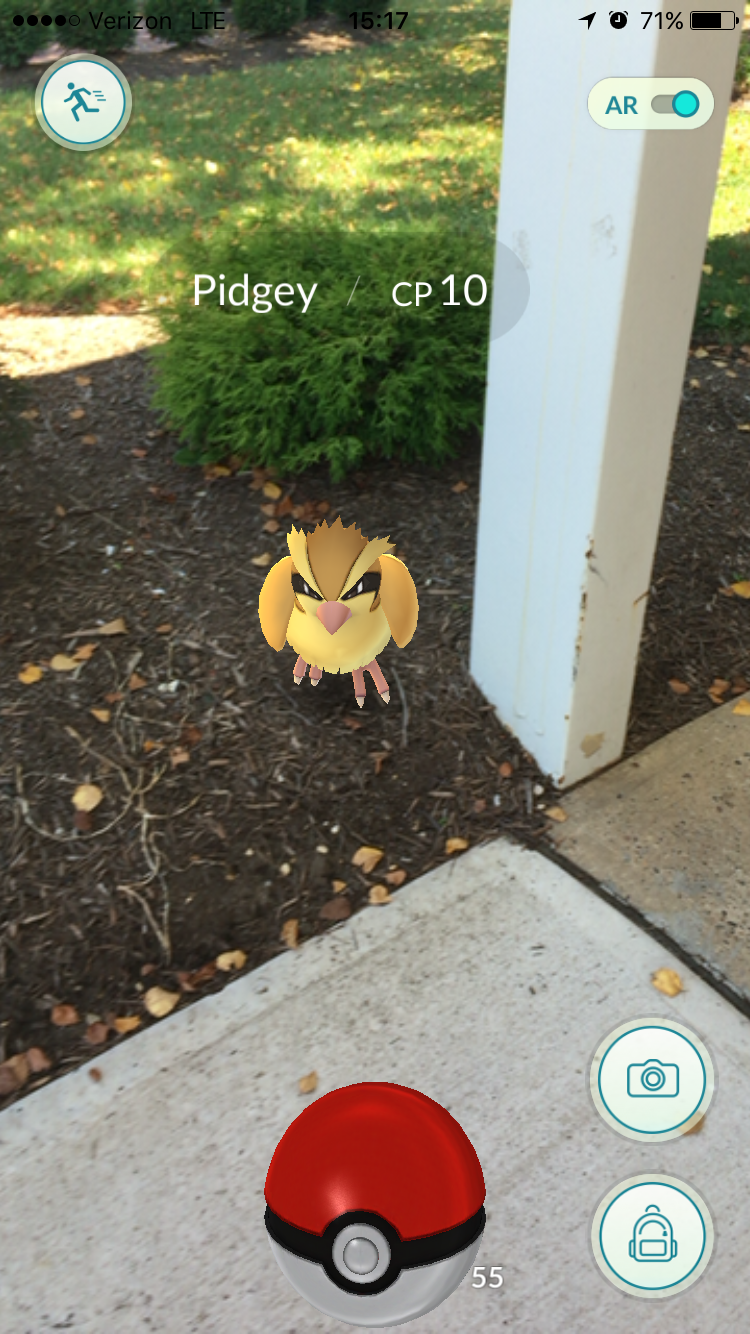

The third and most difficult characteristic of AR, of course, isn’t implemented at all in Pokémon GO. If you find a Pokémon and, while viewing it through your camera lens, pass your hand between the lens and the Pokémon, the “real world” surrounding the Pokémon will disappear behind your hand, but the Pokémon will not (see the accompanying image). The same thing applies if a friend, a car, or a passing dog comes between your phone and the Pokémon: the world disappears behind the new object but the Pokémon does not.

The best example of augmented reality is  connections to the real world and replaces the real world with a digitally produced artificial world. Second Life, Cloud Party, and games such as World of Warcraft are virtual worlds that can be accessed using an ordinary computer, but if you’ve ever been exhilarated by a ride on Disney’s Star Tours, you’ve experienced immersive virtual reality, which stimulates all your senses.

had to work with someone who, every time he was asked to do something, would get this look on his face that said, “Hmmm…. Is this in my job description?” That reaction turns up frequently when people are asked to think about ways to make changes that will improve someone else’s (or even their own) circumstances. On the surface, the work done by an executive assistant in the finance department can seem removed from the company’s product or service offering as seen from the view of the customer, but every role in the company impacts cost and quality, which are inherent to the customer experience. Therefore, change, and the acceptance of questions that lead to change, is every employee’s responsibility.

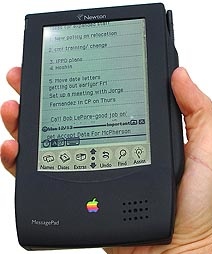

Compare Apple’s Newton with its iPhone successor. Take a look at the pictures of the two devices with the hands normalized to (roughly) the same size. Consider the dimensions of the two instruments. Now look at the screen layout. Look at the styling of the cases. Think about the way users are expected to interface with the devices (stylus vs fingers). Consider the range of uses (apps) available for each and the ease with which users can add or remove functionality (and the ways that Apple makes money from the apps).
